There’s a profound shift happening quietly—and not so quietly—at architecture firms around the globe, as AI-powered image generators are emerging that create and tune design concept renderings in seconds, using existing images and simple text commands.

“It’s something that has accelerated so quickly, and at a scale and a pace that is completely unprecedented. It’s almost like the cat’s out of the bag, and ‘Oh, the cat’s as big as the universe, and it’s never going back in the bag,’” says Josiah Platt, a partner at the Dallas office of the design consultancy Geniant.

What if an architect wants to explore what a mass timber mid-rise will look like at different heights, surrounded by trees, or seen in late afternoon sun through a high-powered Canon RF camera lens? Now, instead of assigning a junior associate or graphic designer to conjure scenarios using traditional software tools, firms like Geniant, Ankrom Moisan, and MVRDV are turning to text-to-image AI programs like Midjourney, DALL-E, and Stable Diffusion to generate photorealistic 2D renderings.

By uploading a reference image and typing a few carefully chosen descriptive phrases into an image generator like Midjourney, an architect or designer with a sufficient design vocabulary can churn out hundreds of renderings in hours to experiment with material choices, massing arrangements, select rooms and features, and light patterns, according to Michael Great, design director of architecture at Portland-based Ankrom Moisan. “What we’ve found is that we can create these variations very, very quickly, and then we can show them to the client and say, ‘Hey, which direction are you thinking the architecture should go?’”

Over the past year, Ankrom Moisan has used Midjourney for at least five commercial projects now on the boards. One of them, a massive multifamily housing project in northern California, leaned on the tool to integrate local code restrictions and satisfy the client’s desire to emulate the design vernacular of Sea Ranch, a modernist seaside community 100 miles north of San Francisco developed in the early 1960s by architect and planner Al Boeke. Translating the sharp, angular lines of the redwood- and glass-clad structures to a sprawling, self-contained housing complex was a bit of a head-scratcher, but Midjourney did the trick.

“Being able to quickly tie together all of the project parameters and design concepts into one image that’s understandable to a client and evokes the emotion and the vibe and the kind of aesthetic that we’re shooting for has been pivotal,” says Ramin Rezvani, a senior associate at Ankrom Moisan.

Renderings on Midjourney v6, the software’s latest update, can be incredibly sharp, sharp enough to fool unwitting clients into believing they represent buildings that already exist in the physical world. The ability of those images to draw on styles across design disciplines and historical periods, and to be manipulated to build on a theme, can lead to revelatory discoveries.

Hassan Ragab is an artist, interdisciplinary designer, and architect who spent four years as a computational designer for Martin Bros., a California-based construction firm that modeled and fabricated intricately articulated portions of the Lucas Museum of Narrative Art in Los Angeles. In summer 2022, he began playing with Midjourney as an artistic tool to create “a new architectural vocabulary” and explore how “AI understands heritage cities in Egypt,” he says.

His work quickly went viral and led to branding jobs for film studios, speaking engagements on AI applications in construction, and appearances in photography exhibitions, such as Xposure in the United Arab Emirates city of Sharjah. His relationship with Midjourney is complicated. “As an architect, you want control. And Midjourney is actually not about control at all,” he says. On the other hand, it has allowed him to test the expressive limits of the discipline. “Because that’s how you do it, if you want to explore something new: you have to break out of the boundary of being an architect.”

“What made Zaha Hadid ‘Zaha’ is that she was inspired by the avant-garde Russian artist Kazimir Malevich,” he says. “She let go of her architectural mind. And that’s what caused innovation. It’s the same for almost any architect: Calatrava, Gaudi, Gehry. That’s how you break out of the boundary. You use the technology to bring architecture into new worlds.”

Image generators can also serve a much more utilitarian function. “It’s almost like having a junior graphic artist on the team, sitting off to the side that you can ask for stuff. ‘Hey, I need to render an alarm clock quickly. Put it on a black background,’” Platt says.

One of Midjourney’s selling points is that it is extremely accessible. Even a novice can enter a few descriptive phrases into the program and generate a photorealistic building rendering. But creating a commercially viable image that accommodates project parameters and serves a client’s interests requires lexical fluency, technical understanding, and often a fair amount of trial and error.

“The idea is to be as descriptive as possible in terms of storytelling,” says Stephen Coorlas, president of an eponymous architecture firm in Chicago’s north suburbs who has been dubbed “the AI whisperer” for his YouTube experimentation with Midjourney workflows. Ad-libbing a hypothetical prompt, he says, “a cinematic photograph using a 60-millimeter camera, during a stormy afternoon, in a log cabin with modern stylings. Black accents, ambient lighting….”

As with many things, the details make all the difference in your results. Prompts need to be tuned for camera type, mood, time of day, and vantage point to achieve desired effects, Coorlas says. Other techniques include word arrangement based on priority, and adding character commands to control the importance of certain words over others, which can have a profound influence on outcomes.
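To make the idea of priority ordering and word weighting concrete, here is a minimal Python sketch of how a designer might assemble such a prompt before pasting it into an image generator. Everything in it is illustrative: `PromptPart` and `build_prompt` are hypothetical helpers, and the `phrase::weight` notation is an assumption modeled on multi-prompt weighting, so the exact syntax should be checked against the tool being used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptPart:
    """One descriptive phrase in a text-to-image prompt (hypothetical helper)."""
    text: str
    priority: int        # lower numbers are placed earlier in the prompt
    weight: float = 1.0  # relative importance; 1.0 means no extra emphasis


def build_prompt(parts, separator="::"):
    """Order phrases by priority and append a weight marker to each one.

    The "phrase::weight" form is an assumption, not a confirmed syntax;
    check what your image generator actually expects.
    """
    # sorted() is stable, so phrases with equal priority keep their input order.
    ordered = sorted(parts, key=lambda p: p.priority)
    return " ".join(f"{p.text}{separator}{p.weight:g}" for p in ordered)


# Phrases loosely based on the ad-libbed prompt quoted in the article.
parts = [
    PromptPart("black accents, ambient lighting", priority=3),
    PromptPart("log cabin with modern stylings", priority=1, weight=2),
    PromptPart("cinematic photograph, stormy afternoon", priority=2, weight=1.5),
]
prompt = build_prompt(parts)
print(prompt)
```

Keeping the phrases as separate, ranked items makes it easy to reorder them or nudge a single weight between runs, which suits the trial-and-error process Coorlas describes.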

Ragab compares prompt engineering to sketching, an iterative process that begins with a core idea and filters down to more granular ideas. “You don’t want to do everything all at once,” he says. “It starts with the first stroke, and then you build layers and layers upon it; you add several variations.”

Still, even for a senior architect or computational designer with a deep understanding of how buildings are designed, fabricated, and constructed, there are massive limitations to what the tools can understand and describe, dimensionally and at the component level. One example is how to render “a wood joint detail with a flitch beam, metal fasteners, and through-bolts,” Coorlas says. “I found a lot of dead ends in terms of being able to integrate it into my business.”

For now, renderings created in Midjourney are, in essence, just pretty images, Rezvani says. The program can’t produce dimensionally accurate models, 3D depth maps, or design and construction documents. Once a rendering is created in Midjourney, it essentially becomes an artifact: Software modeling programs can’t translate the renderings to the 3D environment. What Midjourney can do, however, is produce conceptual images at a speed and level of stylistic diversity previously unimaginable.

For some, that’s a bitter pill to swallow: “There’s a subset of artists, technologists, designers, and architects that loathe the upcoming AI tool sets,” Platt says. “Because you’re effectively creating an algorithm that can make what feels like new art but is extremely derivative of prior art.”

Practitioners wary of AI text-to-image programs may need to adapt to compete with tech-forward practices introducing image generators into their workflows to trim costs, speed production, and appeal to clients who want greater sway in design decisions. As Midjourney continues to refine its diffusion models and architectural capabilities, recently developed AI programs and protocols—like PromeAI, Look X, Finch 3D, ControlNet, Comfy AI, and Stable Diffusion—are pushing the boundaries of machine-generated image creation even further.

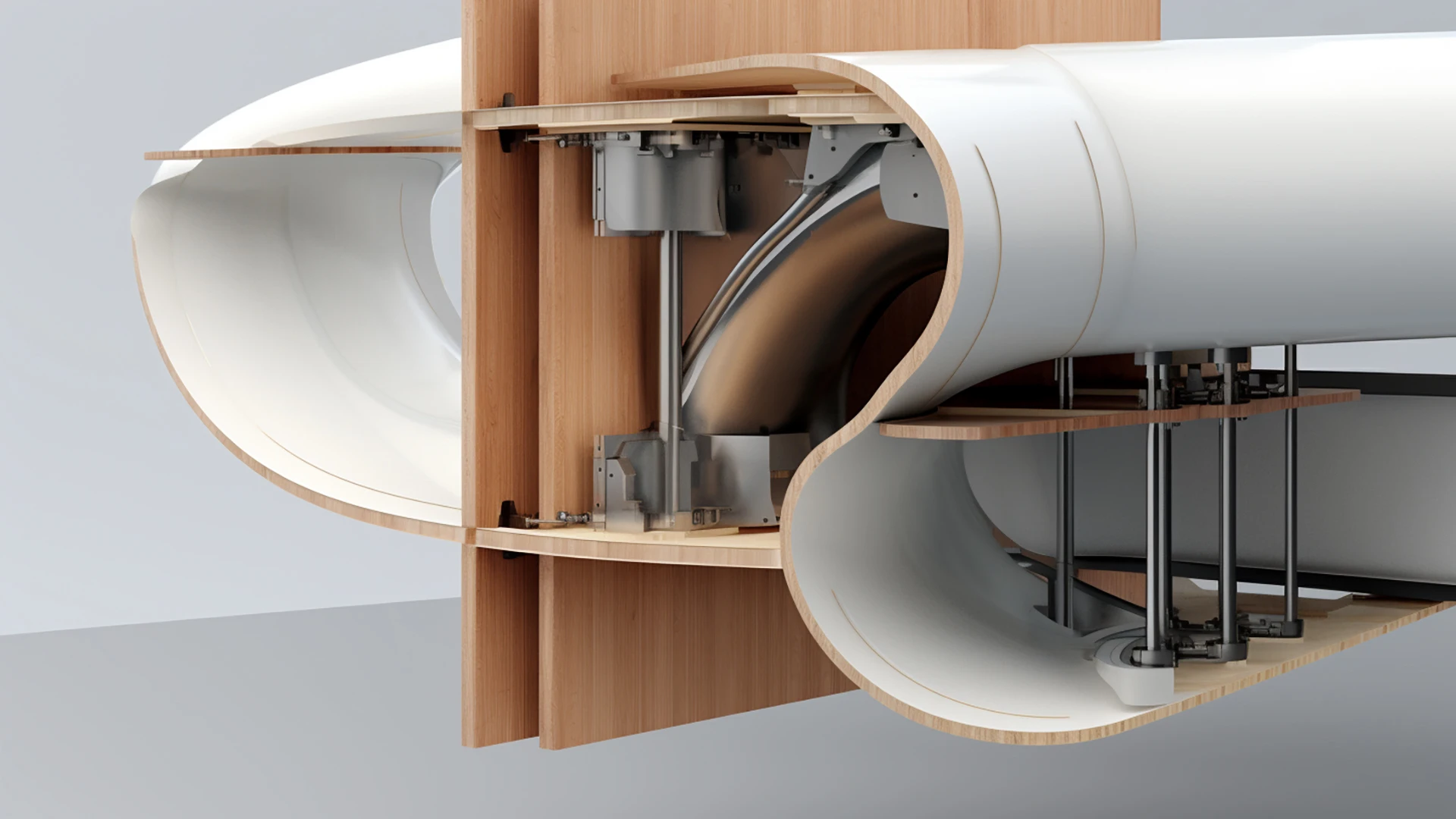

Developed for architects and computational designers, these tools, Ragab says, offer ways to make image generation more context-specific by incorporating proportioned dimensions from working 3D models into generative renderings, interpreting local building codes, and using uploaded screen recordings from 3D modeling programs like Autodesk 3ds Max as the basis for real-time rendering using text-based interface commands. Want your building to reflect the dynamism of the Sydney Opera House? Type the instruction in a prompt field and watch what happens as your model rotates 360 degrees.

“There’s a future where you can say, ‘I need a building. Here’s exactly where I’m going to put it. And here’s an artist I like or an architect that I like,’ and you get the whole [code-compliant] building,” Platt says. “Every single material, bolt, everything... here are the city-planning documents for that plot. Fill it up, I can probably afford 80 floors. Like, legitimately, that’s coming for us.”

Jeff Link is an award-winning journalist covering design, technology and the environment. His work has appeared in Wired, Fast Company, Architect and Dwell.
